Given the latest revelations about xAI’s Grok producing illicit content en masse, in some cases depicting minors, this feels like a timely overview.

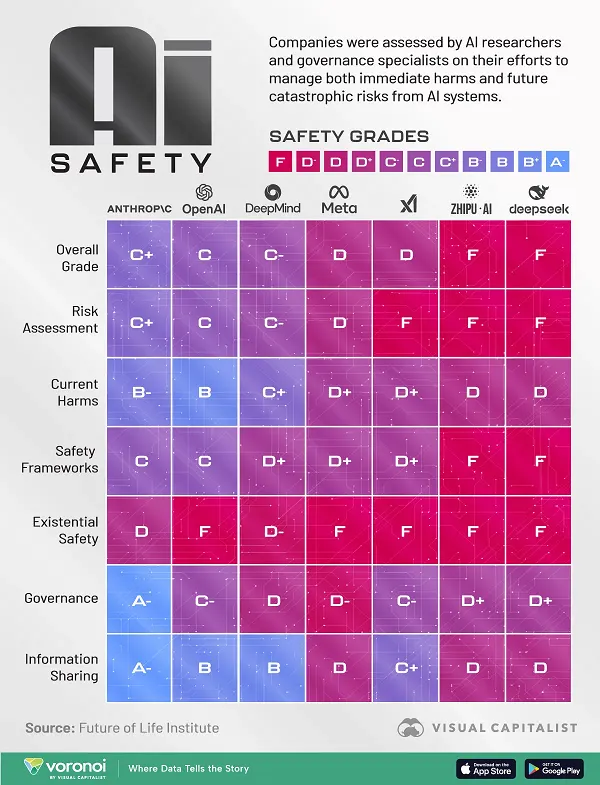

A team at the Future of Life Institute recently conducted a safety review of some of the most popular AI tools on the market, including Meta AI, OpenAI’s ChatGPT, Grok, and others.

The review looked at six key elements:

- Risk assessment – Efforts to ensure that the tool can’t be manipulated or used for harm

- Current harms – Including data security risks and the use of digital watermarking

- Safety frameworks – The process each platform has for identifying and addressing risk

- Existential safety – Whether the project is being monitored for unexpected developments beyond its original programming

- Governance – The company’s lobbying on AI governance and AI safety regulations

- Information sharing – System transparency and insight into how it works