Recently, The New York Times broke a major story: YouTube has quietly relaxed its content moderation policy, and in some cases a video may stay up even if it violates the Community Guidelines. The change has drawn wide attention from content creators, media observers, and ordinary users, and it signals a major shift in how social platforms approach content moderation.

This article explains the ins and outs of the policy and how we, as viewers and creators, should respond to the changing content ecosystem.

What exactly has changed in YouTube's new policy?

According to The New York Times, YouTube has increased its tolerance for "public interest" content since December 2024, as follows:

Higher violation threshold: Previously, a video would be removed if more than 25% of its content violated the rules. That threshold has now been raised to 50%.

Free expression first: If content is deemed to have "high free speech value", it can remain on the platform even if it poses risks.

Escalation of gray-area cases: Borderline content is no longer removed outright; instead, it is escalated to higher-level reviewers.

Expanded EDSA protections: Even if a video touches on sensitive topics such as medical misinformation or hate speech, it may still be protected if it falls within the educational, documentary, scientific, or artistic (EDSA) categories.

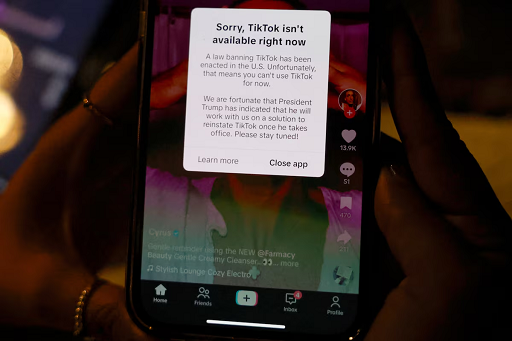

This change comes as social platforms' policies shift rightward overall following Donald Trump's re-election. Many analysts see YouTube's move as an attempt to strike a new balance between political pressure and the public's "right to know".

Why is YouTube doing this?

1. A changing political environment: With Trump re-elected as US President, criticism of "excessive platform censorship" has intensified. Both YouTube and Meta face regulatory pressure from the government, and Google, YouTube's parent company, is also fighting antitrust lawsuits.

2. Shifting public opinion: Since the COVID-19 pandemic, many voters have grown distrustful of "mainstream information" and "government advice", and more and more people are calling for "doing their own research" and seeking "diverse sources of information."

3. A changing creator ecosystem: Long-form videos and podcasts are on the rise. Removing a video several hours long because of a single rule-breaking clip frustrated many creators, and the platform is now trying to restore their "creative space".

What risks does this bring?

1. Misinformation could spread more widely

Content such as medical conspiracy theories, claims of election fraud, and extreme political rhetoric is more likely to stay up.

For example, a video in which RFK Jr. attacked vaccines was left up on the grounds that the "public benefit outweighs the risk".

2. Social polarization may intensify

The information gap between conservatives and liberals may widen further.

Social platforms can become "echo chambers" that reinforce individual biases.

3. Damage to the platform's reputation

Research institutions and educational organizations may question the platform's scientific credibility and sense of responsibility.

Advertisers may pull back over brand-safety concerns.

What should content creators do? (Tutorial section)

This policy change is complex, and it brings both opportunities and challenges for content creators. Here are some strategies you can adopt:

1. Make sensible use of the EDSA exemption

If your content covers sensitive topics, make sure it includes:

Educational context (citations of academic material and authoritative sources)

Documentary structure (presenting multiple perspectives)

Artistic expression (creative rather than merely inflammatory)

Use a neutral tone in your descriptions and avoid direct attacks on individuals or organizations.

2. Label your content and add disclaimers

Include a disclaimer in the video or its description, such as: "The following content is for discussion purposes only and is not medical advice."

Encourage your audience to evaluate information rationally, which also strengthens your "credibility weight".

3. Plan for risk: diversify your distribution

Don't put all your eggs in one basket:

Upload your videos to Rumble, Bilibili, X (formerly Twitter), and other platforms at the same time

Set up your own website and email newsletter so you are not cut off if a platform suddenly removes your content

Risk warning: Not everything can be published!

A more relaxed policy does not mean the following content can be published freely:

Clearly false medical information

Explicit attacks on specific groups (e.g., based on race, gender, or sexual orientation)

Incitement to violence or death threats (e.g., "send President X to the guillotine")

Such content is still subject to removal and may lead to manual reports, reduced visibility for your account, or even a ban!

How can viewers tell whether information is true or false?

With so much content available, ordinary users need basic skills for evaluating information. The following suggestions apply to everyday browsing:

1. Check the source of information

Does it come from an authoritative organization, mainstream media, or a publisher with a publicly known background?

Does it cite specific studies or data? Can its claims be cross-checked against other sources?

2. Beware of emotional headlines and wording

Headlines with words like "Shocking", "Exposed", or "Hidden Truth" are often a sign of emotional manipulation.

Truly credible information tends to be rational, plain, and detailed rather than exaggerated.

3. Find opposing viewpoints

Deliberately look for reports that take the opposite stance, to judge whether the information is biased or incomplete.

Good information is "open to questioning" rather than offering a "one-sided" conclusion.

4. Don't share blindly; delay judgment

If a post makes you "immediately want to share it" or stirs up strong emotions, hit pause first.

The more a piece of content makes you lose control of your emotions, the more it deserves a calm second look.

Conclusion

YouTube's new policy reflects a new platform value: "Broader public discussion may matter more than perfectly clean content." But this also means users will face a more complex, more diverse, and even riskier information environment.

What do you think of YouTube's new moderation standards?