Meta announced the global expansion of its Teen Accounts feature to Facebook and Messenger, following an earlier rollout on Instagram and initial testing in the U.S., U.K., Australia, and Canada.

This move comes as concerns grow worldwide about the impact of social media on younger users' mental health and online safety. For parents and educators, Teen Accounts are more than a product update. They are a practical tool for protecting minors online.

This article will break down what Teen Accounts are, how they work, and how families and schools can use them effectively.

What Are Meta Teen Accounts?

Teen Accounts are a dedicated mode for users under 18. They include built-in protections that restrict unwanted contact, limit exposure to harmful content, and give parents oversight tools.

Meta launched the feature on Instagram in 2024 after facing scrutiny from lawmakers and safety advocates, who criticized social platforms for not doing enough to protect young people. With the expansion to Facebook and Messenger, the protections now cover a much broader audience.

Key Features of Teen Accounts

Meta has embedded multiple safeguards into Teen Accounts to balance usability with safety. These include:

Content Filtering: Teens see fewer recommendations of sensitive or inappropriate content.

Messaging Controls: Teens can only receive direct messages from people they already follow or have interacted with.

Privacy Settings: Only friends can view or reply to teens' Stories.

Interaction Limits: Tags, mentions, and comments are limited to friends or people the teen follows.

Usage Reminders: Teens receive notifications to take breaks after one hour of daily use.

Quiet Mode at Night: Accounts automatically switch to a low-distraction mode overnight, muting notifications.

Parental Permission: Users under 16 need approval from a parent to change core safety settings.

These measures are designed to help teens avoid harassment, maintain healthier screen-time habits, and stay safer online.

How Parents Can Use Teen Accounts

If your child uses Facebook or Messenger, here's how you can make the most of Teen Accounts:

Check the Age Setting: Make sure your teen's account lists their correct birth date. Accounts registered to users under 18 are placed into Teen Accounts automatically.

Connect Family Center: Meta's Family Center allows parents to link their child's account for better visibility.

Review Activity: Parents can monitor screen time and app usage patterns through the dashboard.

Customize Permissions: Messaging, comments, and tag controls can be adjusted to fit family rules.

Encourage Balance: Work with your teen to set healthy daily limits and offline routines.

Tip: Instead of banning social media altogether, use Teen Accounts as a teaching tool to build responsible digital habits.

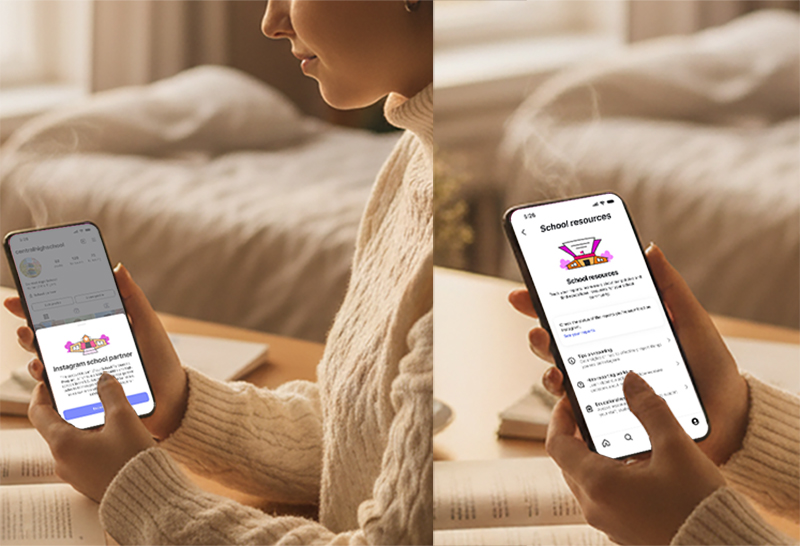

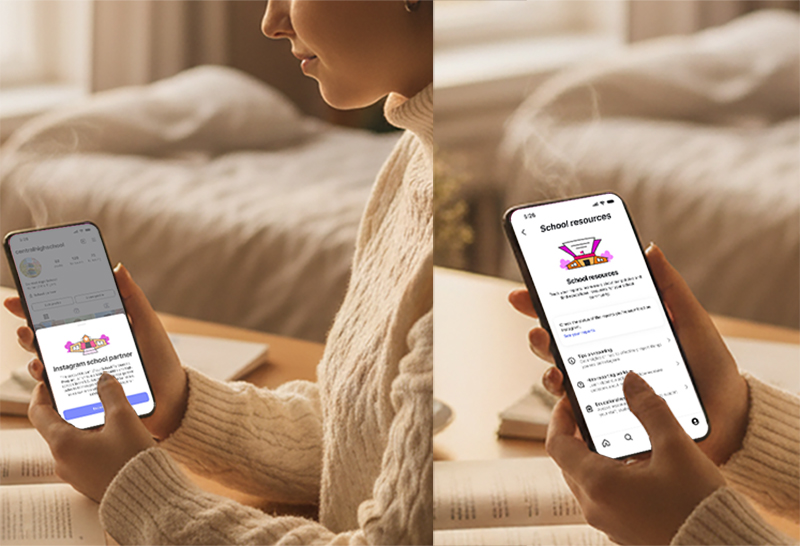

The School Partnership Program

Alongside Teen Accounts, Meta is expanding its School Partnership Program:

Educators can directly report safety issues—such as bullying or harassment—for faster review and removal.

All middle and high schools in the U.S. can now register for priority access and educational resources.

Participating schools will display an official partnership banner on Instagram to inform parents and students.

This initiative aims to strengthen collaboration between schools and platforms, ensuring harmful behavior is addressed quickly.

Why It Matters

Mental Health Risks: A 2023 advisory from the U.S. Surgeon General highlighted links between heavy social media use and rising rates of teen anxiety and depression.

Legal Pressures: Several U.S. states are moving to restrict minors from using social platforms without parental consent.

Lingering Gaps: A recent whistleblower study suggested that harmful content—such as self-harm or sexual exploitation—can still surface, even with Teen Accounts. Meta disputes these findings, but they underline the importance of vigilance.

Teen Accounts provide a strong framework, but they are not a complete shield. Family involvement and school oversight remain essential.

FAQs About Teen Accounts

Q1: Will my teen's account automatically switch?

Yes. Users under 18 are placed into Teen Accounts by default.

Q2: Can teens older than 16 use this mode?

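Teens aged 16 and 17 are also placed into Teen Accounts, but unlike younger teens they can change the default settings without a parent's approval. Parents can still supervise their accounts through Family Center if both parties agree.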

Q3: What if my teen lies about their age?

Meta uses verification tools to flag suspicious accounts, but parents should check registration details directly.

Q4: Does Teen Accounts block all harmful content?

Not entirely. It is designed to reduce exposure to inappropriate or unsafe material, but, as noted above, harmful content can still get through.

Final Thoughts

Meta's global rollout of Teen Accounts is more than a platform update. It reflects growing recognition of the risks minors face online.

For parents, the key step is to set up supervision through Family Center and use Teen Accounts as a collaborative tool, not just a restriction.

For educators, the School Partnership Program offers a chance to tackle issues like bullying with faster platform support.

In today's digital era, protecting teens online requires a joint effort from families, schools, and technology providers. Meta's Teen Accounts are a helpful step forward, but meaningful safety will always depend on guidance and communication at home and in classrooms.